Neural Networks and Deep Learning

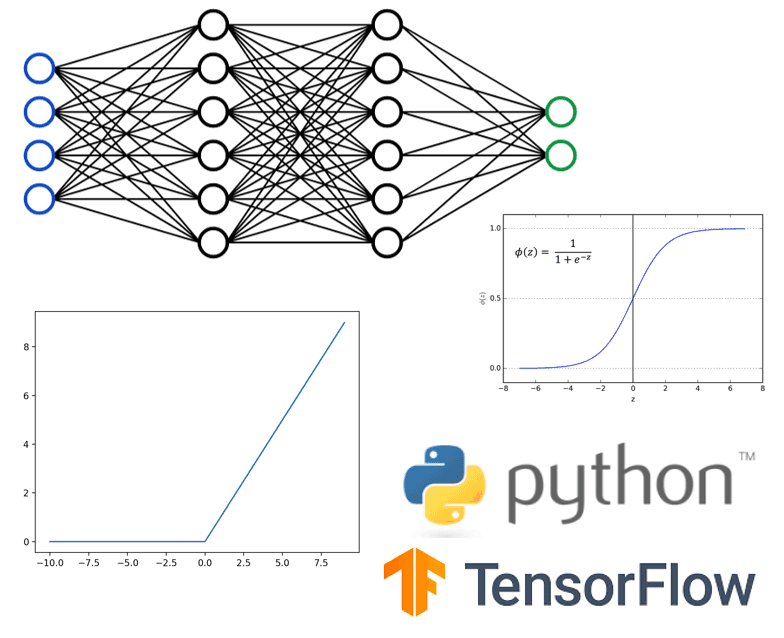

Neural Networks and Deep Learning was the first course I took as part of the Artificial Intelligence specialisation track. It was also my first time working with neural networks since the Udemy course I had done on TensorFlow, so I was very excited to learn more about them and to see the concepts in action through the coding assignments.

The first half of the course covered the preliminary mathematics, such as linear algebra and matrix operations. These topics built on the first-year Engineering Mathematics course and were emphasised while teaching the perceptron model, networks of multiple perceptrons and basic multi-layer networks. Weights, biases, activation functions and gradient descent were also discussed in detail, along with their equations and derivations.
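To make the weights, biases, activations and gradient descent concrete, here is a minimal sketch of a single sigmoid neuron (a logistic unit rather than the classic perceptron learning rule) trained with batch gradient descent. The dataset (logical AND), learning rate, epoch count and seed are illustrative assumptions, not values from the course.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def train_neuron(X, y, lr=0.5, epochs=5000, seed=0):
    """Fit one sigmoid neuron with batch gradient descent on binary cross-entropy."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])  # small random initial weights
    b = 0.0                                     # bias starts at zero
    n = X.shape[0]
    for _ in range(epochs):
        a = sigmoid(X @ w + b)   # forward pass: activations for every sample
        dz = a - y               # dLoss/dz for sigmoid + binary cross-entropy
        dw = X.T @ dz / n        # average gradient w.r.t. the weights
        db = dz.mean()           # average gradient w.r.t. the bias
        w -= lr * dw             # gradient descent update
        b -= lr * db
    return w, b


# Toy dataset: the logical AND function (linearly separable).
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)

w, b = train_neuron(X, y)
preds = (sigmoid(X @ w + b) > 0.5).astype(int)
print(preds)
```

The gradient `a - y` comes from differentiating the binary cross-entropy loss through the sigmoid, which is why the update needs no explicit derivative of the activation.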

The second half dealt with more advanced, deeper networks such as ANNs, CNNs and RNNs, and among RNNs, LSTM and GRU networks were briefly covered. Because this material built on the first half, and each lecture built on the concepts of the previous one, it was essential to keep up with the lecture schedule: falling behind once would have left me lost in the next lecture.

The assessment consisted of two individual assignments and a group project, with one assignment for each half of the course. The first assignment comprised a multi-class classification problem and a regression problem; the second covered object recognition and text classification. For both, we had to design neural networks according to given parameters, run experiments on the training dataset and report the results on the test dataset. The report was to be written like an academic paper, with sections on background, methodology, and experiments and results.

The assignments were manageable: the problem statements were clear, and the data was already cleaned, so no additional preprocessing was needed. However, given the random nature of neural network training, it was very difficult to verify the results, since everyone I knew in the course took a different approach and got different results. This left room for uncertainty before submission, something I was not comfortable with, but there was no alternative, so I submitted anyway. My grades for the assignments were similar to my peers', which was reassuring, and I was confident that I could improve my overall score through the course project.

Students were expected to form their own project groups, but I was unable to find one because the classes were 100% online. At my request, the course instructor placed me in a group with two other students. The project had to be a Kaggle challenge, and we were free to choose any one we liked.

Choosing the topic was a challenge in itself, since all three of us had different ideas about how to proceed. One member wanted to attempt a very complicated project, and the other wanted to do a text analysis project that none of us felt confident about. With very limited time, I wanted to play to our strengths by choosing a Computer Vision project, an area in which all of us had experience. I did my best to convince the group that our priority should be a good grade in the module: all of us had room for improvement after the assignments, and a strong project would give us the boost we needed for a good final grade. In the end, we chose a Computer Vision project titled Plant Pathology Analysis, a multi-class classification problem that aimed to determine which disease a plant has by examining images of its leaves.
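For a multi-class problem like this, a network's final layer typically produces one score (logit) per class, which a softmax turns into probabilities that are trained with categorical cross-entropy. The sketch below shows those two pieces in NumPy; the four class names and the logit values are hypothetical placeholders, not the actual Plant Pathology labels or model outputs.

```python
import numpy as np


def softmax(logits):
    """Convert per-class scores into probabilities, row by row."""
    # Subtracting the row max avoids overflow and leaves the result unchanged.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def categorical_cross_entropy(probs, labels):
    """Mean negative log-probability of the true class (labels are integer indices)."""
    n = probs.shape[0]
    return -np.mean(np.log(probs[np.arange(n), labels] + 1e-12))


CLASSES = ["class_a", "class_b", "class_c", "class_d"]  # hypothetical class names

# Hypothetical logits for two leaf images, one row per image.
logits = np.array([[2.0, 0.5, 0.1, -1.0],
                   [0.2, 0.1, 3.0, 0.3]])
labels = np.array([0, 2])  # true class index for each image

probs = softmax(logits)
loss = categorical_cross_entropy(probs, labels)
print([CLASSES[i] for i in probs.argmax(axis=1)])
print(loss)
```

The predicted class is simply the index with the highest probability, while the loss rewards the model for assigning high probability to the correct class.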

The salient feature of the project was its iterative approach: we ran several rounds of experiments, each introducing a new technique, until we reached our best-performing model. We performed grid search, experimented with Dropout, different optimisers and callbacks such as Early Stopping and learning-rate reduction, and added various kinds of data augmentation to reduce overfitting.
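In TensorFlow/Keras, early stopping and learning-rate reduction are available as the `tf.keras.callbacks.EarlyStopping` and `tf.keras.callbacks.ReduceLROnPlateau` callbacks, passed to `model.fit` through its `callbacks` argument. The pure-Python sketch below is a simplified illustration of the logic behind them, not their actual implementation: the class name `PlateauMonitor`, its patience and factor values, and the validation losses are all hypothetical.

```python
class PlateauMonitor:
    """Simplified early-stopping and learning-rate-reduction logic.

    Hypothetical illustration of the idea behind Keras' EarlyStopping and
    ReduceLROnPlateau callbacks; it is not their actual implementation.
    """

    def __init__(self, lr, stop_patience=4, lr_patience=2, factor=0.5, min_lr=1e-6):
        self.lr = lr                        # current learning rate
        self.stop_patience = stop_patience  # epochs without improvement before stopping
        self.lr_patience = lr_patience      # epochs without improvement before cutting the LR
        self.factor = factor                # multiplier applied to the LR on a plateau
        self.min_lr = min_lr                # lower bound on the LR
        self.best = float("inf")            # best validation loss seen so far
        self.wait_stop = 0                  # epochs since the last improvement (stopping)
        self.wait_lr = 0                    # epochs since the last improvement or LR cut
        self.stopped = False

    def update(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best:
            # Improvement: remember it and reset both counters.
            self.best = val_loss
            self.wait_stop = 0
            self.wait_lr = 0
            return False
        self.wait_stop += 1
        self.wait_lr += 1
        if self.wait_lr >= self.lr_patience:
            # Plateau: shrink the learning rate and restart the LR counter.
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.wait_lr = 0
        if self.wait_stop >= self.stop_patience:
            self.stopped = True
        return self.stopped


# Made-up validation losses, purely for illustration.
val_losses = [0.9, 0.7, 0.6, 0.62, 0.61, 0.63, 0.64, 0.5]
monitor = PlateauMonitor(lr=1e-3)
stopped_epoch = None
for epoch, loss in enumerate(val_losses):
    if monitor.update(loss):
        stopped_epoch = epoch
        break
print(stopped_epoch, monitor.lr, monitor.best)
```

With these made-up numbers, training halts at epoch 6 after the learning rate has been halved twice, so the later improvement to 0.5 is never seen. The patience values control exactly this trade-off between wasted epochs and giving up too early.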

Despite our best efforts, the results from the models we built ourselves were not up to the mark, so we also experimented with established architectures such as EfficientNet. Overall, my experience with the module was quite positive: although my final grade was not an A, I learnt many valuable concepts and techniques that I can apply in future modules and projects.

Keywords

- Linear Algebra

- Neural Networks

- Kaggle Competition

- Python

- TensorFlow

- Group Project Experience